Essay Grader Solution

Responsive learning environments typically involve frequent formative assessments to gauge how well students are absorbing classroom instruction. To handle the resulting high volume of paper grading, many teachers rely on test grader apps that expedite scoring.

However, in English, history, and other humanities classes that tend to have more essay assignments, oral presentations, and project-based work, teacher-graded rubrics are a more effective approach to evaluating performance and comprehension. That appears to rule out standard bubble form graders for teachers in those subject areas, leaving them without any grading assistance at all.

Scoring with rubrics

Rubrics establish a guide for evaluating the quality of student work. Whether scoring an essay or research paper, a live performance or art project, or other student-constructed responses, rubrics clearly delineate the components of the assignment to be graded and the degree of success achieved in each of those areas.

These expectations are communicated to the student at the beginning of the assignment and then scored accordingly by the teacher upon its completion. The dilemma that arises is how to simplify and speed up that grading process when score determination must be done directly by the teacher.

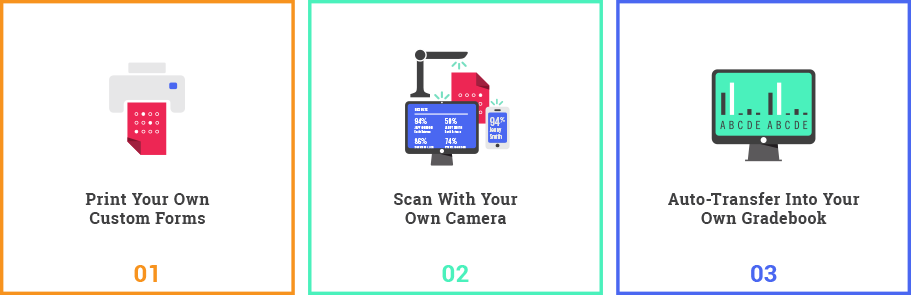

ASSESSMENT MADE EASY

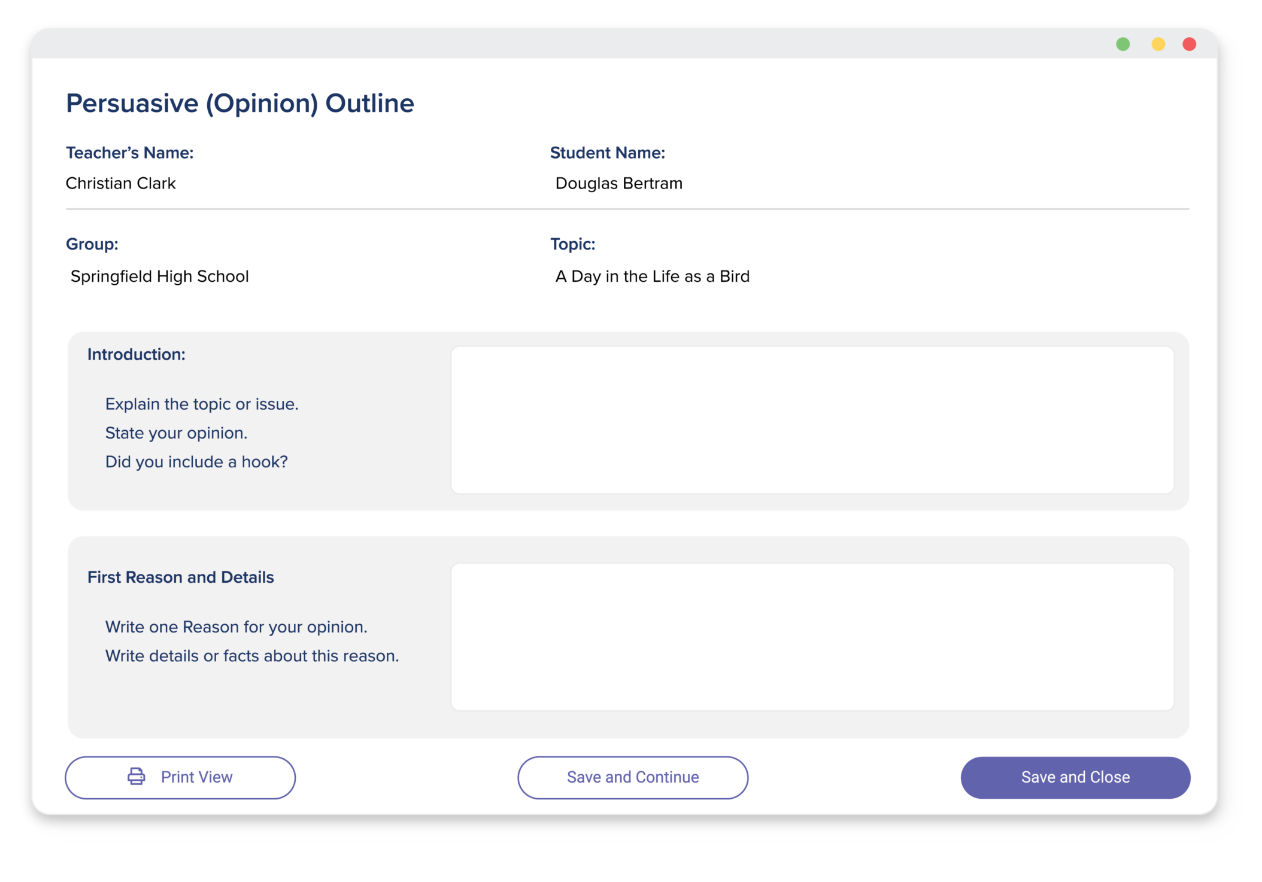

Because GradeCam was the brainchild of experienced teachers, creating a solution for handling time-consuming rubric assignments was a priority. Obviously, there is a certain amount of teacher time required to score these assignments that simply can’t be avoided, but there is also a way to streamline this process and save time on the backend.

Rather than using student-completed answer forms as with regular tests, GradeCam allows teachers to create teacher-completed rubric forms that can be filled in quickly using “The Bingo Method” and then scanned and recorded automatically. This speeds up the assignment and transfer of grades, as well as the generation of the data needed to review and respond to areas of concern.


The world’s leading AI platform for teachers to grade essays

EssayGrader is an AI-powered grading assistant that gives high-quality, specific, and accurate writing feedback on essays. On average, it takes a teacher 10 minutes to grade a single essay; with EssayGrader, that time is cut to 30 seconds. That's a 95% reduction in grading time, with the same results.


EssayGrader analyzes essays with the power of AI. Our software is trained on massive amounts of diverse text data, including books, articles, and websites. This lets us provide accurate and detailed writing feedback to students and save teachers loads of time. We are the perfect AI-powered grading assistant.

EssayGrader analyzes essays for grammar, punctuation, spelling, coherence, clarity and writing style errors. We provide detailed reports of the errors found and suggestions on how to fix those errors. Our error reports help speed up grading times by quickly highlighting mistakes made in the essay.

Bulk uploading

Uploading one essay at a time and then waiting for it to finish is a pain. Bulk uploading lets you upload an entire class's worth of essays at once. You can work on other important tasks and come back in a few minutes to find all the essays graded.

Custom rubrics

We don't assume how you want to grade your essays. Instead, we provide you with the ability to create the same rubrics you already use. Those rubrics are then used to grade essays with the same grading criteria you are already accustomed to.

Sometimes you don't want to read a 5,000-word essay and would just like a quick summary. Or maybe you're a student who needs to give your teacher a summary of your essay. Our summarizer feature can help: it produces a concise summary that includes the most important information and unique phrases.

AI detector

Our AI detector feature allows teachers to identify if an essay was written by AI or if only parts of it were written by AI. AI is becoming very popular and teachers need to be able to detect if essays are being written by students or AI.

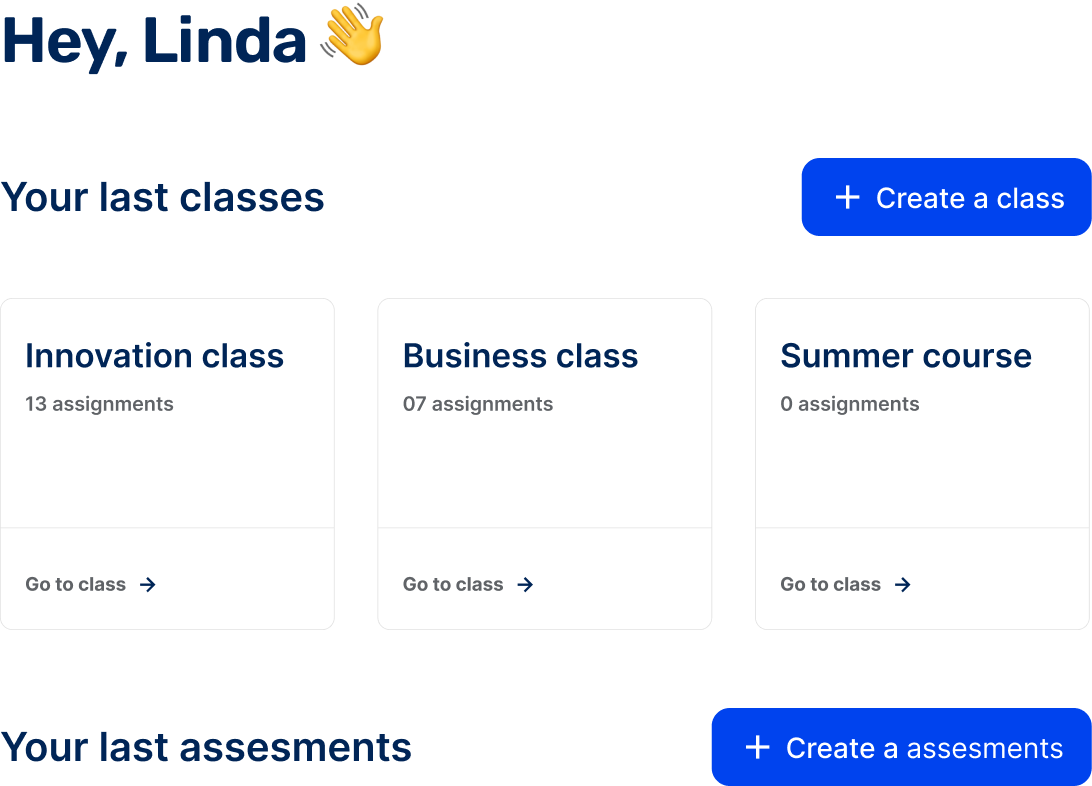

Create classes to neatly organize your students' essays. This is an essential feature when you have multiple classes and need to track down students' essays quickly.

Our mission

At EssayGrader, our mission is crystal clear: we're transforming the grading experience for teachers and students alike. Picture a space where teachers can grade essays efficiently and accurately, lightening their workload, while students are empowered to improve their writing skills. Our software is a dynamic work in progress, a testament to our commitment to constant improvement, and we're dedicated to refining and enhancing our platform continually. With each update, we strive to simplify the lives of both educators and learners, making the process of grading and writing essays smoother and more efficient.

We recognize the immense challenges teachers face: the heavy burdens, the long hours, and the often underappreciated efforts. EssayGrader is our way of shouldering some of that load. We are here to support you, to make your tasks more manageable, and to give you the tools you need to excel in your teaching journey.


AI Essay Grader

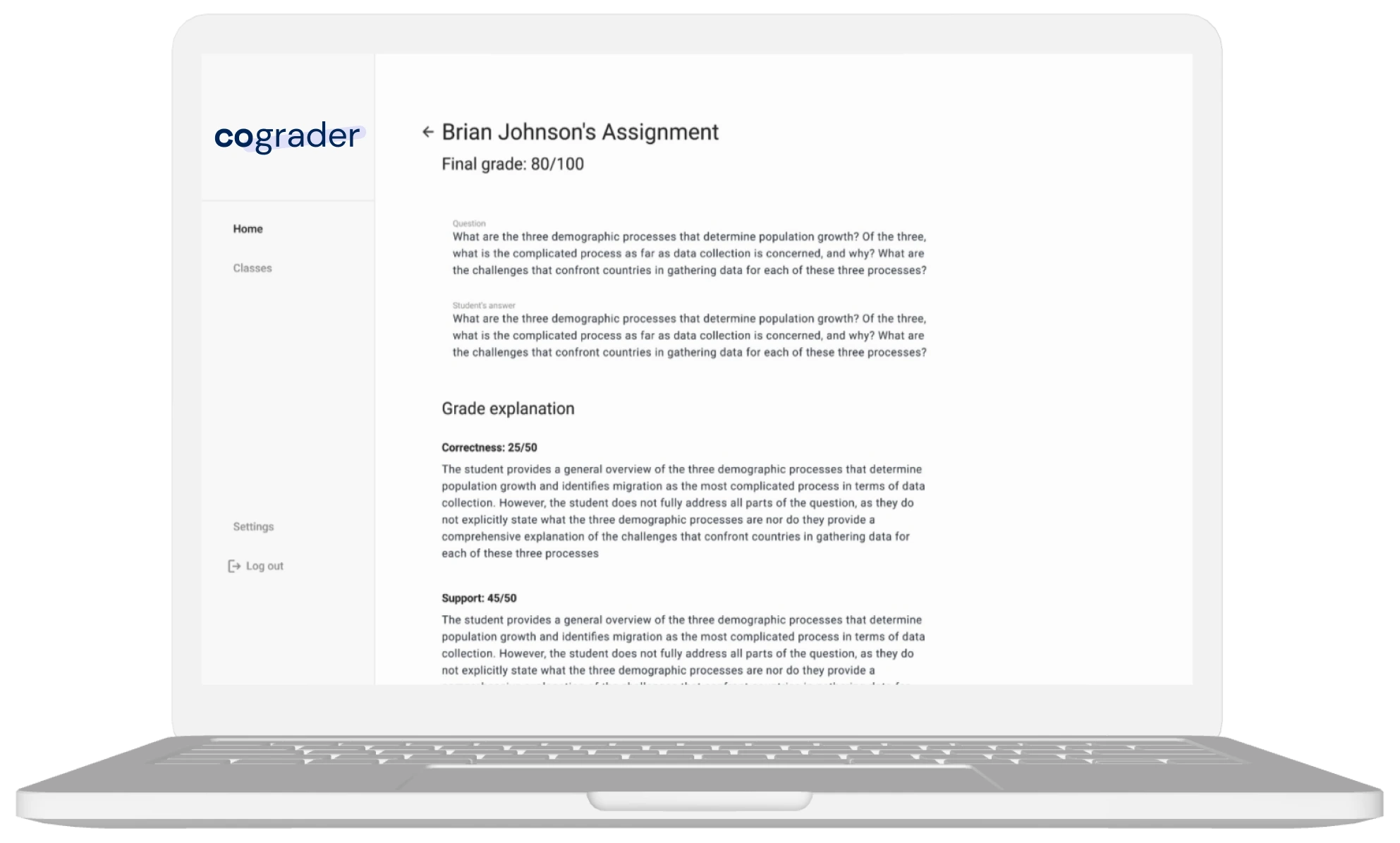

CoGrader is an AI essay grader that helps teachers save 80% of the time they spend grading essays, with instant first-pass feedback and grades based on your rubrics.

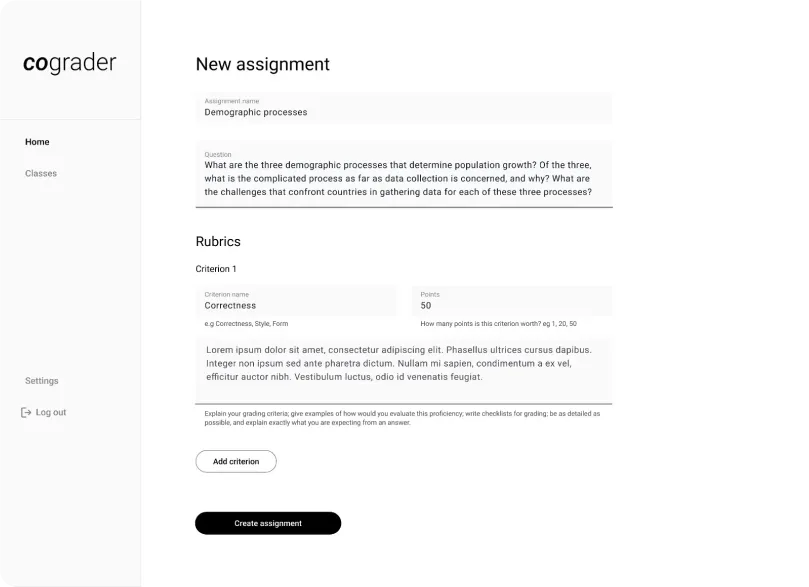

Pick your own Rubrics to Grade Essays with AI

We have 30+ rubrics in our library, but you can also build your own.

Argumentative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Claim/Focus, Support/Evidence, Organization and Language/Style.

Informative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Clarity/Focus, Development, Organization and Language/Style.

Narrative Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Plot/Ideas, Development/Elaboration, Organization and Language/Style.

Analytical Essays

Rubrics from 6th to 12th Grade and Higher Education. Grades Claim/Focus, Analysis/Evidence, Organization and Language/Style.

AP Essays, DBQs & LEQs

Grade Essays from AP Classes, including DBQs & LEQs. Grades according to the AP rubrics.

30+ Rubrics Available

You can also build your own rubric/criteria.

Your AI Essay Grading Tool

Grading is a never-ending task that consumes valuable time and energy, often leaving teachers frustrated and overwhelmed.

With CoGrader, grading becomes a breeze. You will have more time for what really matters: teaching, supporting students and providing them with meaningful feedback.

Meet your AI Grader

Leverage Artificial Intelligence (AI) to get First-Pass Feedback on your students' assignments instantaneously, detect ChatGPT usage and see class data analytics.

Save time and effort

Streamline your grading process and save hours or days.

Ensure fairness and consistency

Remove human biases from the equation with CoGrader's objective and fair grading system.

Provide better feedback

Provide lightning-fast comprehensive feedback to your students, helping them understand their performance better.

Class Analytics

Get an x-ray of your class's performance to spot challenges and strengths, and inform planning.

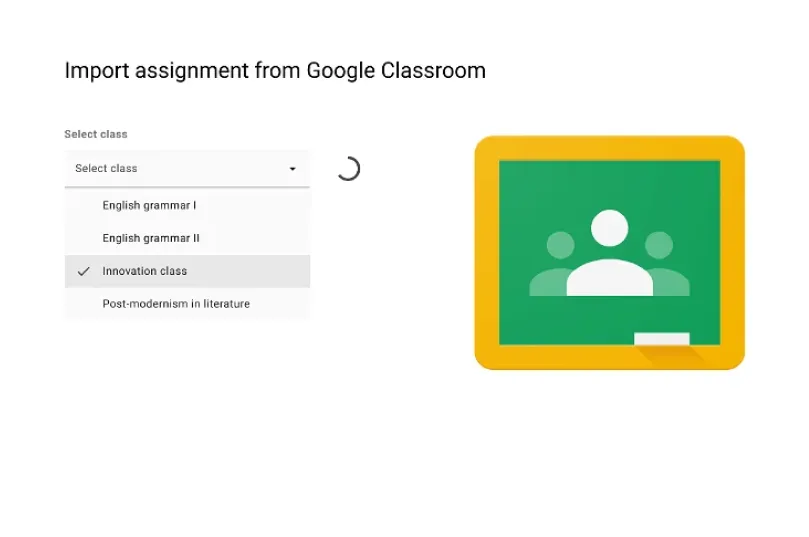

Google Classroom Integration

Import assignments from Google Classroom to CoGrader, and export reviewed feedback and grades back to it.

Canvas and Schoology compatibility

Export your assignments in bulk and upload them to CoGrader with one click.

Used at 1000+ schools

Backed by UC Berkeley


How does CoGrader work?

It's easy to supercharge your grading process

Import Assignments from Google Classroom

CoGrader will automatically import the prompt given to the students and all files they have turned in.

Define Grading Criteria

Use our rubric templates, based on your state's standards, or set up your own grading criteria to align with your evaluation standards, specific requirements, and teaching objectives. Built-in rubrics include Argument, Narrative, and Informative pieces.

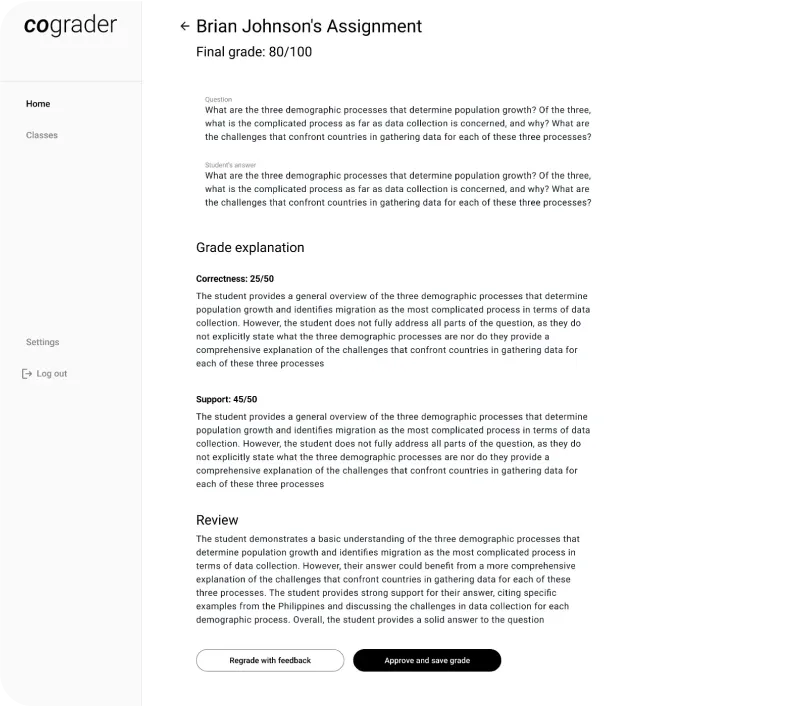

Get Grades, Feedback and Reports

CoGrader generates detailed feedback and justification reports for each student, highlighting areas of improvement together with the grade.

Review and Adjust

The teacher has the final say! Adjust the grades and the feedback so you can make sure every student gets the attention they deserve.

Frequently asked questions

CoGrader: the AI copilot for teachers.

You can think of CoGrader as your teaching assistant: it streamlines grading by drafting initial feedback and grade suggestions, saving you time and hassle, and providing top-notch feedback for the kids. You can use standardized rubrics and customize criteria, ensuring that your grading process is fair and consistent. Plus, you can detect whether your student used ChatGPT to answer the assignment.

CoGrader uses AI to grade automatically and suggest feedback, taking the rubric and your grading instructions into account. Currently, CoGrader integrates with Google Classroom and will soon integrate with other LMSs. If you don't use Google Classroom, let your LMS provider know that you are interested so they can speed up the process.

Try it out! We have designed CoGrader to be user-friendly and intuitive. We offer training and support to help you get started. Let us know if you need any help.

Privacy matters to us, and we're committed to protecting student privacy. We are FERPA-compliant. We use student names to match assignments with the right students, but we quickly replace them with a code that keeps the information private, and we discard the original names. We don't keep any other personal information about the students. The only thing we keep is the text of the students' answers to assignments, because our grading service needs it. This information is protected through Google's secure sign-in, which uses the OAuth 2.0 authorization standard to control who can access it. For a complete understanding of our commitment to privacy and the measures we take to ensure it, we encourage you to read our detailed privacy policy.
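CoGrader does not say how its name-to-code step works. A common way to do this kind of pseudonymization is keyed hashing. The sketch below assumes HMAC-SHA256 from Python's standard library. The function names (`pseudonymize`, `pseudonymize_submissions`) and the `name`/`student_code` fields are hypothetical and are not CoGrader's implementation.

```python
import hashlib
import hmac
import secrets


def pseudonymize(name: str, key: bytes) -> str:
    """Map a student name to a stable, opaque code with HMAC-SHA256.

    Whitespace and letter case are normalized first, so "Ada  Lovelace" and
    "ada lovelace" map to the same code. Without the secret key, nobody can
    check a guessed name against a code.
    """
    normalized = " ".join(name.split()).lower()
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return "student-" + digest[:16]


def pseudonymize_submissions(submissions: list[dict], key: bytes) -> list[dict]:
    """Return copies of the submissions with 'name' replaced by 'student_code'."""
    cleaned = []
    for sub in submissions:
        record = {k: v for k, v in sub.items() if k != "name"}  # drop the original name
        record["student_code"] = pseudonymize(sub["name"], key)
        cleaned.append(record)
    return cleaned


if __name__ == "__main__":
    # Hypothetical key handling: a real service would load this from a secrets store.
    key = secrets.token_bytes(32)
    print(pseudonymize_submissions([{"name": "Ada Lovelace", "text": "My essay..."}], key))
```

A keyed hash matters here because student names are easy to guess. Anyone could hash a class roster with plain SHA-256 and match the results, but they cannot do so without the secret key.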

CoGrader finally allows educators to provide specific and timely feedback. In addition, it saves time and hassle, ensures consistency and accuracy in grading, reduces biases, and promotes academic integrity.

Soon, we'll indicate whether students have used ChatGPT or other AI systems for assignments, but achieving 100% accurate detection is not possible due to the complexity of human and AI-generated writing. Claims to the contrary are misinformation, as they overlook the nuanced nature of modern technology.

CoGrader uses cutting-edge generative AI algorithms that have undergone rigorous testing and human validation to ensure accuracy and consistency. In comparisons to manual grading, CoGrader typically shows only a small difference of up to ~5% in grades, often less than the variance between human graders. Some teachers have noted that this variance can be influenced by personal bias or the workload of grading. While CoGrader works hard to minimize errors and offer reliable results, it is always a good practice to review and validate the grades (and feedback) before submitting them.

CoGrader is designed to assist educators by streamlining the grading process with AI-driven suggestions. However, the final feedback and grades remain the responsibility of the educator. While CoGrader aims for accuracy and fairness, it should be used as an aid, not a replacement, for professional judgment. Educators should review and validate the grades and feedback before finalizing results. The use of CoGrader constitutes acceptance of these terms, and we expressly disclaim any liability for errors or inconsistencies. The final grading decision always rests with the educator.

Just try it out! We'll guide you along the way. If you have any questions, we're here to help. Once you're in, you'll save countless hours (and a lot of procrastination) and make grading efficient, fair, and helpful.


Transforming Assessment With AI-Powered Essay Scoring

IntelliMetric® delivers accurate, consistent, and reliable scores for writing prompts for K-12 school districts, higher education, and personnel testing, and as an API for software.

IntelliMetric® Is The Gold Standard In AI Scoring Of Written Prompts

Trusted by educational institutions for surpassing human expert scoring, IntelliMetric® is the go-to essay scoring platform for colleges and universities. IntelliMetric® also aids in hiring by identifying candidates with excellent communication skills. As an assessment API, it enhances software products and increases their value. Unlock its potential today.

Proven Capabilities Across Markets

Whether you’re a hiring manager, school district administrator, or higher education administrator, IntelliMetric® can help you meet your organization’s goals.

Powerful Features

IntelliMetric® delivers relevant scores and expert feedback tailored to writers’ capabilities. IntelliMetric® scores prompts of varying lengths, providing invaluable insights for both testing and writing improvement. Don't settle for less; unleash the power of IntelliMetric® for scoring excellence.

IntelliMetric® scores writing instantly, helping organizations save time and money evaluating writing with the same level of accuracy and consistency as expert human scorers.

IntelliMetric® can be used either to test writing skills or to improve instruction by providing detailed and accurate feedback according to a rubric.

IntelliMetric® gives teachers and business professionals the bandwidth to focus on other, more impactful job duties by scoring writing prompts that would otherwise take countless hours each day.

Using legitimacy detection, IntelliMetric® checks that all writing is original and free of plagiarism, and that it doesn’t contain messages that diverge from the assigned writing prompt.

Case Studies and Testimonials

Below are use cases and testimonials from customers who used IntelliMetric® to reach their goals by automating the analysis and grading of written responses. These users found IntelliMetric® to be a vital tool for providing instant feedback and scoring written responses.

Santa Ana School District

Through the use of IntelliMetric®, the Santa Ana school district was able to evaluate student writing, and students were able to use the instantaneous feedback to drastically improve their writing. The majority of teachers found IntelliMetric® beneficial in their classrooms as an instructional tool and found that students were more motivated to write.

I have worked with Vantage Learning’s MY Access Automated Essay Scoring product both as a teacher and as a secondary ELA Curriculum specialist for grades 6-12. As a teacher, I immediately saw the benefits of the program. My students were more motivated to write because they knew that they would receive immediate feedback upon completion and submission of their essays. I also taught my students how to use the “My Tutor” and “My Editor” feedback to revise their essays. In the past, I felt like Sisyphus pushing a boulder up the hill, but with MY Access that heavy weight was lifted: my students were revising using specific feedback from MY Access, and consequently their writing drastically improved. When it comes to giving instantaneous feedback, MY Access performed more efficiently than I could.

More than 350 research studies conducted both in-house and by third-party experts have determined that IntelliMetric® has levels of consistency, accuracy and reliability that meet, and more often exceed, those of human expert scorers. After performing the practice DWA within SAUSD, I surveyed our English teachers and asked them about their recent experience with MY Access. Of the 85 teachers that responded to the survey, 82% of the teachers felt that their students’ experience with MY Access was either fair, good or very good. Similarly, 75% of the teachers thought the accuracy of the scoring was fair, good, or very good. Lastly, 77% of the teachers surveyed said that they would like to use MY Access in their classrooms as an instructional tool.

Many of the teachers’ responses to the MY Access survey included a call for a plagiarism detector. At the time, we had not opted for the addition of Cite Smart, the onboard plagiarism detector for MY Access. This year, however, we will be using it, and teachers across the district are excited to have this much-needed tool available.

As a teacher and as an ELA curriculum specialist, I know of no other writing tool available to teachers that is more helpful than MY Access. When I tell teachers that we will be using MY Access for instruction and not just benchmarking this year, the most common reply I receive is “Oh great! That means that I can teach a lot more writing!” Think about it: if a secondary teacher has 175 students (35 students in 5 periods) and the teacher takes 10 minutes to provide feedback on each student’s paper, then it would take the teacher about 29 hours (1,750 minutes) to give effective feedback to his/her students. MY Access is a writing teacher’s best friend!
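The teacher's workload estimate works out as follows. The quoted 29 hours rounds down the exact figure of 29 hours 10 minutes:

$$
5 \text{ periods} \times 35 \text{ students} = 175 \text{ students}, \qquad
175 \times 10 \text{ min} = 1{,}750 \text{ min} = \frac{1750}{60} \text{ h} \approx 29.2 \text{ h}
$$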

Jason Crabbe

Secondary Language Arts Curriculum Specialist

Santa Ana Unified School District

Arkansas State University

“In 2018, our students performed poorly in style and mechanics. Other forms of intervention have not proven successful. We piloted IntelliWriter and IntelliMetric and produced great results. The University leadership has since implemented a full-scale rollout across all campuses” - Dr. Melodie Philhours, Arkansas State University

The United Nations

The United Nations utilizes IntelliMetric® via the Adaptera Platform for real-time evaluation of personnel writing assessments, offering a cost-effective way to ensure communication skills in the workforce.

The United Nations, the Department of Homeland Security, and the world's largest online retail store all use IntelliMetric®, through the Adaptera Platform, for immediate scoring of personnel writing assessments. In a world where clear, concise communication is essential in the workforce, using IntelliMetric® to score writing assessments provides immediate, cost-effective evaluation of your employees' skills.

IntelliMetric® Offers Multilingual Scoring & Support

Score written responses in your native language with IntelliMetric! The automated scoring platform offers instant feedback and scoring in several languages to provide more accuracy and consistency than human scoring wherever you’re located. Accessible any time or place for educational or professional needs, IntelliMetric® is the perfect solution to your scoring requirements.

Intelliwriter

IntelliMetric-powered Solutions

- Automated Essay Scoring using AI.

- Online Writing Instruction and Assessment.

- Adaptive Learning and Assessment Platform.

- District Level Capture and Assessment of Student Writing.

- AI-Powered Assessment and Instruction APIs.

- Advanced AI-Driven Writing Mastery Tool.


Revolutionize Your Writing Process with Smodin AI Grader: A Smarter Way to get feedback and achieve academic excellence!

For Students

Stay ahead of the curve, with objective feedback and tools to improve your writing.

Your Virtual Tutor

Harness the expertise of a real-time virtual teacher who will guide every paragraph in your writing process, ensuring you produce an A+ masterpiece in a fraction of the time.

Unbiased Evaluation

Ensure an impartial and objective assessment, removing any potential bias or subjectivity that may influence traditional grading methods.

Perfect your assignments

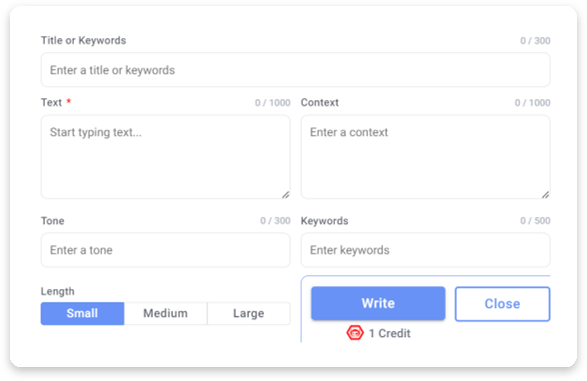

With the “Write with AI” tool, transform your ideas into words with a few simple clicks. Excel at all your essays, assignments, reports, and more, and watch your writing skills soar to new heights.

For teachers

Revolutionize your Teaching Methods

Spend less on grading

Embrace the power of efficiency and instant feedback with our cutting-edge tool, designed to save you time while providing a fair and unbiased evaluation, delivering consistent and objective feedback.

Reach out to more students

Upload documents in bulk and establish your custom assessment criteria, ensuring a tailored evaluation process. Expand your reach and impact by engaging with more students.

Focus on what you love

Let AI Grading handle the heavy lifting of assessments for you. With its data-driven algorithms and standardized criteria, it takes care of all your grading tasks, freeing up your valuable time to do what you're passionate about: teaching.

Grader Rubrics

Pick the systematic frameworks that work as guidelines for assessing and evaluating the quality, proficiency, and alignment of your work, allowing for consistent and objective grading without any bias.

Analytical Thinking

Originality

Organization

Focus Point

Write with AI

Set your tone and keywords, and generate brilliance through your words

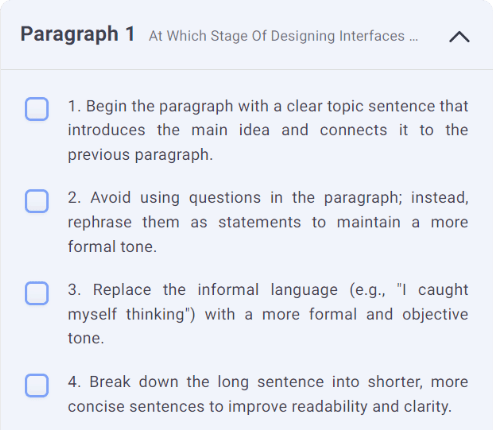

Figure: AI Grader average deviation from real grade (10-point scale)

Our AI grader matches human scores 82% of the time.* AI scores are 100% consistent.**

Graph: A dataset of essays was graded by professional graders on a scale of 1-10 and cross-referenced against the detailed criteria within the rubric to determine their real scores. Deviation was defined as the variation of scores from the real score. The graph contains an overall score (the average of all criteria) as well as each individual criterion. The criteria are the premade criteria available in Smodin's AI Grader, listed in the graph as column headings. The custom rubrics were made using Smodin's AI Grader custom criteria generator to produce each of Smodin's premade criteria (the same criteria as the column headings). The overall score for Smodin's premade rubrics matched human scores 73% of the time with our advanced AI, while custom rubrics generated by Smodin's custom rubric generator matched human grades 82% of the time with our advanced AI. The average deviation from the real scores for all criteria is shown above.

* Rubrics created using Smodin's AI custom criteria matched human scores 82% of the time on the advanced AI setting. Smodin's premade criteria matched human scores 73% of the time. When the AI score differed from the human score, 86% of the time it differed by only 1 point on a 10-point scale.

** The AI grader provides 100% consistency, meaning that the same essay will produce the same score every time it's graded. All grades used in the data were repeated 3 times and produced 100% consistency across all 3 grading attempts.
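Smodin does not publish how these statistics were computed. The minimal sketch below shows how the three kinds of numbers quoted above could be calculated. It assumes integer scores on the same 10-point scale and reads "deviation" as the mean absolute difference from the real score, which is an assumption. The function names are hypothetical.

```python
def agreement_stats(ai_scores, human_scores):
    """Compare AI scores with reference ("real") human scores on the same scale."""
    if len(ai_scores) != len(human_scores) or not ai_scores:
        raise ValueError("need two equally long, non-empty score lists")
    diffs = [abs(a - h) for a, h in zip(ai_scores, human_scores)]
    mismatches = [d for d in diffs if d != 0]
    return {
        # share of essays where the AI score equals the human score
        "exact_match_rate": sum(d == 0 for d in diffs) / len(diffs),
        # among the mismatches, share that are off by exactly one point
        "off_by_one_share": (sum(d == 1 for d in mismatches) / len(mismatches))
        if mismatches else None,
        # average absolute distance from the human score (assumed meaning of "deviation")
        "mean_abs_deviation": sum(diffs) / len(diffs),
    }


def is_consistent(grade, essay, repeats=3):
    """True if grading the same essay `repeats` times always yields the same score."""
    return len({grade(essay) for _ in range(repeats)}) == 1
```

For example, AI scores `[7, 8, 5, 9]` against human scores `[7, 7, 5, 6]` give an exact-match rate of 0.5. Half of the mismatches are off by one point, and the mean absolute deviation is 1.0.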

AI Feedback

Unleash the Power of Personalized Feedback: Elevate Your Writing with the Ultimate Web-based Feedback Tool

Elevate your essay writing skills with Smodin AI Grader, and achieve the success you deserve with Smodin, the ultimate AI-powered essay grader. Whether you are a student looking to improve your grades or a teacher looking to provide valuable feedback to your students, Smodin has got you covered. Get objective feedback to improve your essays and excel at writing like never before! Don't miss this opportunity to transform your essay-writing journey and unlock your full potential.

Smodin AI Grader: The Best AI Essay Grader for Writing Improvement

As a teacher or as a student, writing essays can be a daunting task. It takes time, effort, and a lot of attention to detail. But what if there was a tool that could make the process easier? Meet Smodin AI Grader, the best AI essay grader on the market, which provides objective feedback and helps you improve your writing skills.

Objective Feedback with Smodin - The Best AI Essay Grader

Traditional grading methods can often be subjective, with different teachers providing vastly different grades for the same piece of writing. Smodin eliminates this problem by providing consistent and unbiased feedback, ensuring that all students are evaluated fairly. With advanced algorithms, Smodin can analyze and grade essays in real-time, providing instant feedback on strengths and weaknesses.

Improve Your Writing Skills with Smodin - The Best AI Essay Grader

Smodin can analyze essays quickly and accurately, providing detailed feedback on different aspects of your writing, including structure, grammar, vocabulary, and coherence. It identifies areas that need improvement and suggests how to make your writing more effective. If Smodin detects that your essay has a weak thesis statement, it will suggest how to improve it, and if it detects poor grammar, it will suggest how to correct the errors. This makes it easier to improve your essay, get better grades, and become a better writer.

Smodin AI Grader for Teachers - The Best Essay Analysis Tool

For teachers, Smodin can be a valuable tool for grading essays quickly and efficiently, providing detailed feedback to students, and helping them improve their writing skills. With Smodin AI Grader, teachers can grade essays in real time, identify common errors, and provide suggestions on how to correct them.

Smodin AI Grader for Students - The Best Essay Analysis Tool

For students, Smodin can be a valuable tool for improving your writing skills and getting better grades. By analyzing your essay's strengths and weaknesses, Smodin can help you identify areas that need improvement and provide suggestions on how to make your writing more effective. This can be especially useful for students who are struggling with essay writing and need extra help and guidance.

Increase your productivity - The Best AI Essay Grader

Using Smodin can save you a lot of time and effort. Instead of spending hours grading essays manually or struggling to improve your writing without feedback, you can use Smodin to get instant and objective feedback, allowing you to focus on other important tasks.

Smodin is the best AI essay grader on the market that uses advanced algorithms to provide objective feedback and help improve writing skills. With its ability to analyze essays quickly and accurately, Smodin can help students and teachers alike to achieve better results in essay writing.

© 2024 Smodin LLC

The Criterion ® Online Writing Evaluation Service streamlines the student writing experience.

The Criterion ® Online Writing Evaluation Service is a web-based, instructor-led automated writing tool that helps students plan, write and revise their essays. It offers immediate feedback, freeing up valuable class time by allowing instructors to concentrate on higher-level writing skills.

- Automatically scores students’ writing, so instructors save time

- Provides immediate, online feedback to improve student writing skills

- Includes detailed reports to help administrators track performance

It gives immediate feedback to students … which in turn motivates them to fix mistakes and raise their scores. Criterion can do in seconds what would take teachers hours if they hand-graded and edited essays.

~ Institution in Oregon

I have the opportunity to jump in while they are producing their first drafts to give them my own feedback without penalty — that is, before they submit for a grade. This program is especially helpful for my virtual students.

~ Institution in Florida

LICENSE THE CRITERION SERVICE IP

Enhance your offerings with the Criterion writing tool, part of the ETS ® Assessment Services, and become a distributor.

What is automated essay scoring?

Automated essay scoring (AES) is an important application of machine learning and artificial intelligence to the field of psychometrics and assessment. In fact, it’s been around far longer than “machine learning” and “artificial intelligence” have been buzzwords in the general public! The field of psychometrics has been doing such groundbreaking work for decades.

So how does AES work, and how can you apply it?

The first and most critical thing to know is that there is no algorithm that “reads” the student essays. Instead, you need to train an algorithm. That is, if you are a teacher and don’t want to grade your essays, you can’t just throw them into an essay scoring system. You have to actually grade the essays (or at least a large sample of them) and then use that data to fit a machine learning algorithm. Data scientists use the term “train the model”, which sounds complicated, but if you have ever done simple linear regression, you have experience with training models.

There are three steps for automated essay scoring:

- Establish your data set (collate student essays and grade them).

- Determine the features (predictor variables that you want to pick up on).

- Train the machine learning model.
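The minimal sketch below illustrates the three steps. It uses a hypothetical toy data set, a single feature (word count), and simple linear regression fitted by ordinary least squares. Word count alone is far too crude for real scoring; the sketch only shows where each step fits.

```python
# Step 1: establish the data set. Hypothetical toy essays with human scores (0-5).
GRADED = [
    ("Napoleon was French.", 1),
    ("Napoleon was born in Corsica and later crowned himself Emperor.", 3),
    ("Napoleon, born in Corsica, became First Consul, then Emperor, "
     "won at Austerlitz, lost at Waterloo, and died on St. Helena.", 5),
]


# Step 2: determine the features. Here, one illustrative feature: word count.
def word_count(text):
    return len(text.split())


# Step 3: train the model. Simple linear regression, score = intercept + slope * x,
# fitted by ordinary least squares.
def fit_simple_regression(xs, ys):
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature has no variance; the slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def predict(model, text):
    intercept, slope = model
    return intercept + slope * word_count(text)


if __name__ == "__main__":
    model = fit_simple_regression([word_count(t) for t, _ in GRADED],
                                  [s for _, s in GRADED])
    print(predict(model, "Napoleon lost at Waterloo."))
```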

Here’s an extremely oversimplified example:

- You have a set of 100 student essays, which you have scored on a scale of 0 to 5 points.

- The essay is on Napoleon Bonaparte, and you want students to know certain facts, so you want to give them “credit” in the model if they use words like Corsica, Consul, Josephine, Emperor, Waterloo, Austerlitz, and St. Helena. You might also add other features such as word count, number of grammar errors, number of spelling errors, etc.

- You create a map of which students used each of these words, as 0/1 indicator variables. You can then fit a multiple regression with 7 predictor variables (did they use each of the 7 words) and the 0-5 score as your criterion variable. You can then use this model to predict each student’s score from just their essay text.
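The Napoleon example can be written out as a small, dependency-free Python sketch. Each essay becomes a row of seven 0/1 indicators, and a multiple regression with an intercept is fitted via the normal equations. The helper names are hypothetical, and in practice you would use a statistics package rather than a hand-written solver. The case-insensitive substring match is also a simplifying assumption: it would count "consulate" as a use of "Consul".

```python
KEYWORDS = ["Corsica", "Consul", "Josephine", "Emperor",
            "Waterloo", "Austerlitz", "St. Helena"]


def keyword_indicators(text):
    """One 0/1 predictor per keyword: did the essay mention it (case-insensitive)?"""
    lowered = text.lower()
    return [1.0 if kw.lower() in lowered else 0.0 for kw in KEYWORDS]


def solve(a, b):
    """Solve the square linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: some predictors are linearly dependent")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_multiple_regression(rows, scores):
    """Ordinary least squares via the normal equations (X^T X) b = X^T y.

    Returns [intercept, weight_1, ..., weight_k].
    """
    X = [[1.0] + list(row) for row in rows]  # prepend an intercept column
    p = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(X, scores)) for i in range(p)]
    return solve(xtx, xty)


def predict_score(coefs, text):
    return coefs[0] + sum(w * v for w, v in zip(coefs[1:], keyword_indicators(text)))
```

If no student used one of the keywords, that column is all zeros and the normal equations are singular, so `solve` raises an error. Dropping unused keywords or adding regularization are common fixes.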

Obviously, this example is too simple to be of use, but the same general idea is done with massive, complex studies. The establishment of the core features (predictive variables) can be much more complex, and models are going to be much more complex than multiple regression (neural networks, random forests, support vector machines).

Here’s an example of the very start of a data matrix for features, from an actual student essay. Imagine that you also have data on the final scores, 0 to 5 points. You can see how this is then a regression situation.
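One row of such a data matrix could be computed as below. The sketch assumes four simple, topic-independent features chosen for illustration: word count, sentence count, average word length, and type-token ratio. The grammar-error and spelling-error counts mentioned earlier would need an external checker, so they are left out.

```python
import re


def essay_features(text):
    """A few generic, topic-independent features for one essay (one data-matrix row)."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    return {
        "word_count": n_words,
        "sentence_count": len(sentences),
        "avg_word_length": sum(len(w) for w in words) / n_words if n_words else 0.0,
        # distinct words / total words: a rough measure of vocabulary variety
        "type_token_ratio": len({w.lower() for w in words}) / n_words if n_words else 0.0,
    }
```

For "Napoleon was born in Corsica. He became Emperor." this gives 8 words, 2 sentences, an average word length of 4.875, and a type-token ratio of 1.0.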

How do you score the essay?

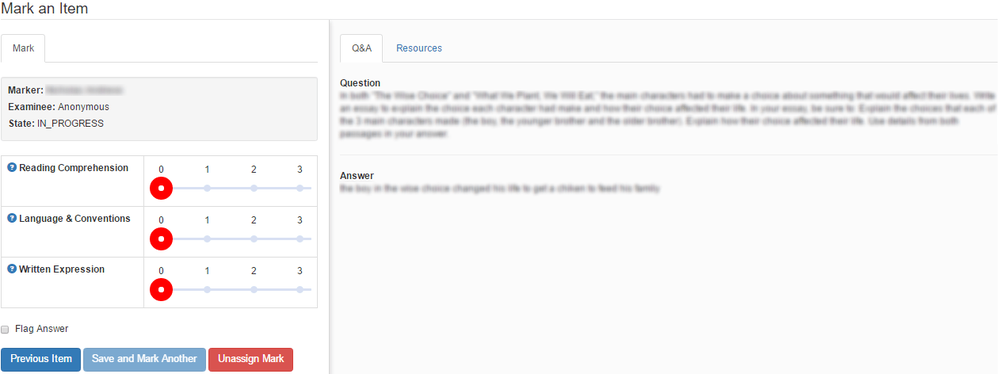

If the essays are on paper, automated essay scoring won’t work unless you have extremely good character-recognition (OCR) software that converts them into a digital database of text. Most likely, you delivered the exam as an online assessment and already have the database. If so, your platform should include functionality to manage the scoring process, including multiple custom rubrics. An example of our FastTest platform is provided below.

Some rubrics you might use:

- Supporting arguments

- Organization

- Vocabulary / word choice

How do you pick the Features?

This is one of the key research problems. In some cases, it might be something similar to the Napoleon example. Suppose you had a complex item on Accounting, where examinees review reports and spreadsheets and need to summarize a few key points. You might pull out a few key terms (mortgage amortization) or numbers (2.375%) and consider them to be features. I saw a presentation at Innovations In Testing 2022 that did exactly this. Think of it as giving the students “points” for using those keywords. Because you are using complex machine learning models, though, it is not simply a single unit point: each keyword contributes to a regression-like model with a positive slope.
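For the accounting example, a minimal sketch might count exact key phrases and exact numeric answers. The function and the number regex are assumptions for illustration. Because numbers are matched as exact strings, a rounded answer such as "2.38%" earns no credit, which may or may not be what you want.

```python
import re

NUMBER_PATTERN = re.compile(r"\d+(?:\.\d+)?%?")  # e.g. 12, 2.375, 2.375%


def key_term_features(text, key_terms, key_numbers):
    """Count how often each expected phrase and each expected number appears."""
    lowered = text.lower()
    features = {}
    for term in key_terms:
        # whole-phrase, case-insensitive match
        pattern = r"\b" + re.escape(term.lower()) + r"\b"
        features[term] = len(re.findall(pattern, lowered))
    numbers_in_text = NUMBER_PATTERN.findall(text)
    for number in key_numbers:
        # exact string match, so "2.375%" and "2.38%" count as different answers
        features[number] = numbers_in_text.count(number)
    return features
```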

In other cases, you might not know. Maybe it is an item on an English test being delivered to English language learners, and you ask them to write about what country they want to visit someday. You have no idea what they will write about. But what you can do is tell the algorithm to find the words or terms that are used most often, and try to predict the scores with those. Maybe words like “jetlag” or “edification” show up in essays from students who tend to get high scores, while words like “clubbing” or “someday” tend to be used by students with lower scores. The AI might also pick up on spelling errors. I worked as an essay scorer in grad school, and I can’t tell you how many times I saw kids use “ludacris” (the name of an American rap artist) instead of “ludicrous” when trying to describe an argument. They had literally never seen the word used or spelled correctly. Maybe the AI model learns to give that a negative weight. That’s the next section!
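When you do not know the features in advance, one simple starting point is to let the data pick the vocabulary. This sketch ranks terms by how many essays use them (document frequency) and then represents each essay as counts of those terms. The three-letter minimum is a crude, hypothetical stand-in for a proper stop-word list, and the example essays are invented.

```python
import re
from collections import Counter
from typing import List, Sequence


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z']+", text.lower())


def build_vocabulary(essays: Sequence[str], size: int, min_len: int = 3) -> List[str]:
    """Return the `size` terms used in the most essays (document frequency).

    Dropping tokens shorter than `min_len` is a crude stand-in for a stop-word list.
    """
    doc_freq: Counter = Counter()
    for essay in essays:
        doc_freq.update({t for t in tokenize(essay) if len(t) >= min_len})
    # Highest document frequency first; alphabetical order breaks ties.
    ranked = sorted(doc_freq.items(), key=lambda kv: (-kv[1], kv[0]))
    return [term for term, _ in ranked[:size]]


def term_counts(essay: str, vocab: Sequence[str]) -> List[int]:
    """Represent one essay as the number of times it uses each vocabulary term."""
    counts = Counter(tokenize(essay))
    return [counts[t] for t in vocab]


essays = [
    "I want to visit Japan to cure my jetlag and seek edification",
    "Someday I want to go clubbing in Spain",
    "I want to visit Spain someday",
]
vocab = build_vocabulary(essays, size=4)
print(vocab)  # → ['want', 'someday', 'spain', 'visit']
print(term_counts("Someday, someday I will visit", vocab))  # → [0, 2, 0, 1]
```

The resulting count vectors are what a model would then weight, positively or negatively, against the human scores.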

How do you train a model?

Well, if you are familiar with data science, you know there are TONS of models, and many of them have a bunch of parameterization options. This is where more research is required. Which model works best on your particular essay, and doesn’t take 5 days to run on your data set? That’s for you to figure out. There is a trade-off between simplicity and accuracy: a complex model might be accurate but take days to run, while a simpler model might take 2 hours but with a 5% drop in accuracy. It’s up to you to evaluate.

If you have experience with Python or R, you know that there are many packages that provide this analysis out of the box; it is a matter of selecting a model that works.
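The simplicity-versus-accuracy trade-off is something you measure rather than guess. Below is a minimal sketch, assuming a single hypothetical numeric feature per essay (a stand-in for a real feature set) and two toy models: a predict-the-mean baseline and a one-predictor least-squares line. It holds out part of the data, then reports root-mean-square error on the held-out essays and wall-clock training time for each model; heavier models from an R or Python package would slot into the same loop.

```python
import math
import random
import time
from typing import Callable, Dict, List, Tuple

Example = Tuple[float, float]       # (feature value, human score)
Model = Callable[[float], float]    # maps a feature value to a predicted score
Trainer = Callable[[List[Example]], Model]


def train_mean(train: List[Example]) -> Model:
    """Baseline: always predict the average training score."""
    mean = sum(y for _, y in train) / len(train)
    return lambda x: mean


def train_linear(train: List[Example]) -> Model:
    """One-predictor least-squares line."""
    n = len(train)
    mx = sum(x for x, _ in train) / n
    my = sum(y for _, y in train) / n
    sxx = sum((x - mx) ** 2 for x, _ in train)
    sxy = sum((x - mx) * (y - my) for x, y in train)
    slope = sxy / sxx if sxx else 0.0
    return lambda x: my + slope * (x - mx)


def holdout_compare(data: List[Example], trainers: Dict[str, Trainer],
                    test_fraction: float = 0.3,
                    seed: int = 0) -> Dict[str, Tuple[float, float]]:
    """Return {model name: (held-out RMSE, training seconds)}."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    train, test = shuffled[:cut], shuffled[cut:]
    results = {}
    for name, trainer in trainers.items():
        start = time.perf_counter()
        model = trainer(train)
        seconds = time.perf_counter() - start
        rmse = math.sqrt(sum((model(x) - y) ** 2 for x, y in test) / len(test))
        results[name] = (rmse, seconds)
    return results


# Synthetic data: a hypothetical feature that predicts the score perfectly.
data = [(float(w), w / 100) for w in range(50, 500, 10)]
results = holdout_compare(data, {"mean": train_mean, "linear": train_linear})
for name, (rmse, seconds) in results.items():
    print(f"{name}: RMSE={rmse:.3f}, train time={seconds:.6f}s")
```

On real essays you would compare several candidate models this way, ideally with cross-validation rather than a single split, and weigh the accuracy gain against the extra run time.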

How well does automated essay scoring work?

Well, as psychometricians love to say, “it depends.” You need to do the model fitting research for each prompt and rubric. It will work better for some than others. The general consensus in research is that AES algorithms work as well as a second human, and therefore serve very well in that role. But you shouldn’t use them as the only score; of course, that’s impossible in many cases.

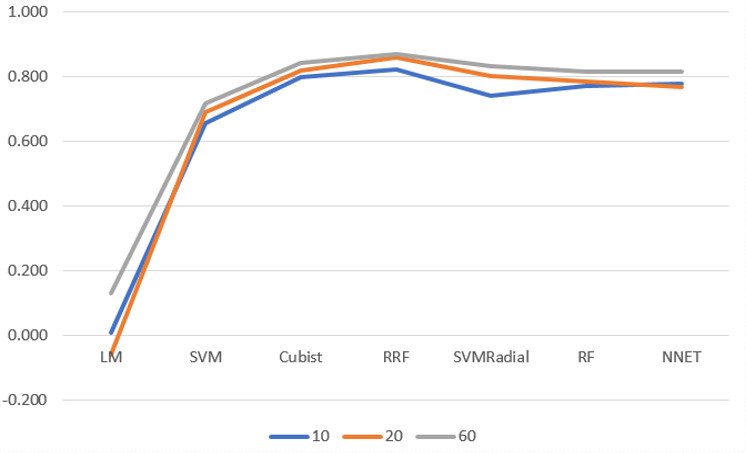

Here’s a graph from some research we did on our algorithm, showing the correlation between human and AES scores. The three lines are for the proportion of the sample used in the training set; we saw decent results from only 10% in this case! Some of the models correlated above 0.80 with humans, even though this is a small data set. We found that the Cubist model took a fraction of the time needed by complex models like Neural Net or Random Forest; in this case, it might be sufficiently powerful.
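Correlation is only one way to compare machine and human scores. The sketch below (plain Python, toy data) computes Pearson correlation, exact agreement, and quadratic weighted kappa (QWK), an agreement statistic widely reported in automated essay scoring research. QWK is an addition here, not necessarily the statistic behind the graph above, and the code assumes integer scores on a known scale.

```python
import math
from typing import Sequence


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def exact_agreement(a: Sequence[int], b: Sequence[int]) -> float:
    """Share of essays where both raters gave the identical score."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def quadratic_weighted_kappa(a: Sequence[int], b: Sequence[int],
                             min_score: int, max_score: int) -> float:
    """Cohen's kappa with quadratic disagreement weights (i - j)^2 / (k - 1)^2."""
    k = max_score - min_score + 1
    observed = [[0] * k for _ in range(k)]
    for x, y in zip(a, b):
        observed[x - min_score][y - min_score] += 1
    n = len(a)
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    weighted_obs = weighted_exp = 0.0
    for i in range(k):
        for j in range(k):
            w = (i - j) ** 2 / (k - 1) ** 2
            weighted_obs += w * observed[i][j]
            weighted_exp += w * hist_a[i] * hist_b[j] / n  # counts expected by chance
    return 1.0 - weighted_obs / weighted_exp


human = [1, 2, 3, 4]
machine = [1, 2, 3, 3]
print(round(pearson_r(human, machine), 3))
print(exact_agreement(human, machine))                            # → 0.75
print(round(quadratic_weighted_kappa(human, machine, 0, 5), 3))   # → 0.875
```

A common sanity check is to compute the same statistics for two human raters and see whether the engine-human values come close.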

How can I implement automated essay scoring without writing code from scratch?

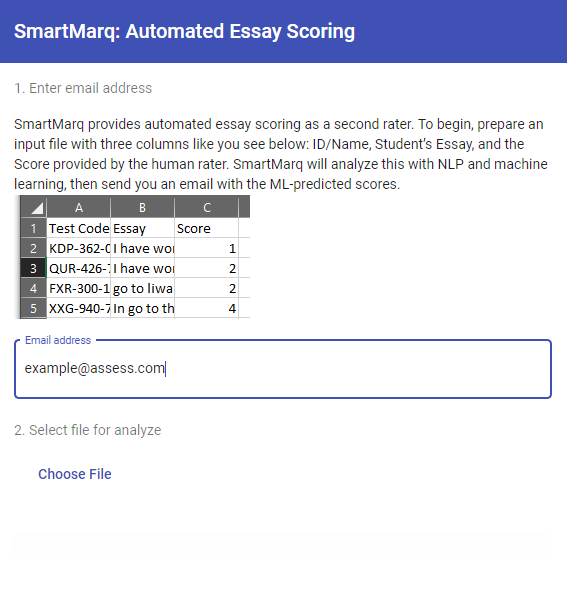

There are several products on the market. Some are standalone, and some are integrated with a human-based essay scoring platform. ASC’s platform for automated essay scoring is SmartMarq. It is currently available as a standalone tool, which makes it extremely easy to use. It is also in the process of being integrated into our online assessment platform, alongside human scoring, to provide an efficient and easy way of obtaining a second or third rater for QA purposes.
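When an engine serves as a second rater, the platform needs a rule for what happens when the two scores disagree. Here is a minimal sketch of one style of business rule; the one-point threshold, the averaging, and the third-rater resolution step are all hypothetical choices for illustration, not SmartMarq’s actual logic.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Resolution:
    final_score: Optional[float]  # None while the essay waits for a third rater
    needs_third_rater: bool


def resolve(first: int, second: int, max_gap: int = 1) -> Resolution:
    """Hypothetical rule: average scores within `max_gap` points, else escalate."""
    if abs(first - second) <= max_gap:
        return Resolution((first + second) / 2, False)
    return Resolution(None, True)


def adjudicate(first: int, second: int, third: int) -> float:
    """Hypothetical resolution: average the third score with the closer of the first two."""
    closer = min((first, second), key=lambda s: abs(s - third))
    return (closer + third) / 2


def route(pairs: Dict[str, Tuple[int, int]], max_gap: int = 1) -> List[str]:
    """IDs of essays, given (human score, engine score), that need a third rater."""
    return sorted(eid for eid, (h, m) in pairs.items()
                  if resolve(h, m, max_gap).needs_third_rater)


pairs = {"e1": (3, 4), "e2": (1, 4), "e3": (5, 2)}
print(route(pairs))          # → ['e2', 'e3']
print(adjudicate(1, 4, 3))   # → 3.5
```

Tightening `max_gap` to 0 sends every disagreement to a third rater, which raises quality control at the cost of more human scoring time.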

Want to learn more? Contact us to request a demonstration.


Nathan Thompson, PhD


Introducing Gradescope

Gradescope is the transformative paper-to-digital grading platform that revolutionizes the way you approach grading and assessment.

Discover the power of Gradescope

With Gradescope, you can unlock the power of scalable reporting, harness the potential of data collection, and seamlessly integrate our platform with other crucial institutional systems, such as Learning Management Systems (LMSs). This seamless integration ensures that your institution's investment is maximized, and central support becomes a cornerstone of your grading workflows.

The core of Gradescope's mission is elevating both instructors and students. With our pioneering grading platform, instructors become more impactful, delivering faster, clearer, and more consistent feedback. Anonymous grading reduces unintended bias, fostering an environment of equitable evaluation. Customized accommodations ensure that every student has the opportunity to excel, driving a level playing field.

Unveiling benefits at every turn

With Gradescope’s grading platform, instructors and administrators can:

- Use "show your work" assessments to gain swift insights into learning outcomes, aligned with accreditation standards and institutional objectives.

- Return work electronically to protect FERPA-sensitive data, ensuring a secure environment for both students and instructors.

- Sync rosters and grades between Gradescope and your Learning Management System (LMS), ensuring a seamless experience for instructors and students alike.



Automated Essay Scoring

26 papers with code • 1 benchmark • 1 dataset

Essay scoring: Automated Essay Scoring is the task of assigning a score to an essay, usually in the context of assessing the language ability of a language learner. The quality of an essay is affected by the following four primary dimensions: topic relevance, organization and coherence, word usage and sentence complexity, and grammar and mechanics.

Source: A Joint Model for Multimodal Document Quality Assessment


Most implemented papers

Automated Essay Scoring Based on Two-Stage Learning

Current state-of-the-art feature-engineered and end-to-end Automated Essay Score (AES) methods are proven to be unable to detect adversarial samples, e.g., essays composed of permuted sentences and prompt-irrelevant essays.
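One reason feature-engineered systems can miss permuted-sentence essays: any score computed only from word counts is identical for an essay and a sentence-shuffled copy, because shuffling changes the order but not the counts. This small sketch (plain Python; the regex sentence splitter is a naive assumption) demonstrates that invariance; it is an illustration of the weakness, not the two-stage method from the paper.

```python
import random
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Word counts only; word order and sentence order are discarded."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def permute_sentences(text: str, seed: int = 0) -> str:
    """Shuffle sentences, splitting naively after '.', '!' or '?'."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    random.Random(seed).shuffle(sentences)
    return " ".join(sentences)


essay = ("Travel broadens the mind. It exposes students to new languages. "
         "Therefore, schools should fund exchange programs.")
scrambled = permute_sentences(essay, seed=1)
print(scrambled)
print(bag_of_words(essay) == bag_of_words(scrambled))  # → True
```

Any model whose only inputs are these counts must give the scrambled essay the same score as the original, which is why coherence-aware features or models are needed to catch this kind of input.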

A Neural Approach to Automated Essay Scoring

nusnlp/nea • EMNLP 2016

SkipFlow: Incorporating Neural Coherence Features for End-to-End Automatic Text Scoring

Our new method proposes a new SkipFlow mechanism that models relationships between snapshots of the hidden representations of a long short-term memory (LSTM) network as it reads.

Neural Automated Essay Scoring and Coherence Modeling for Adversarially Crafted Input

Youmna-H/Coherence_AES • NAACL 2018

We demonstrate that current state-of-the-art approaches to Automated Essay Scoring (AES) are not well-suited to capturing adversarially crafted input of grammatical but incoherent sequences of sentences.

Co-Attention Based Neural Network for Source-Dependent Essay Scoring

This paper presents an investigation of using a co-attention based neural network for source-dependent essay scoring.

Language models and Automated Essay Scoring

In this paper, we present a new comparative study on automatic essay scoring (AES).

Evaluation Toolkit For Robustness Testing Of Automatic Essay Scoring Systems

midas-research/calling-out-bluff • 14 Jul 2020

This number is increasing further due to COVID-19 and the associated automation of education and testing.

Prompt Agnostic Essay Scorer: A Domain Generalization Approach to Cross-prompt Automated Essay Scoring

Cross-prompt automated essay scoring (AES) requires the system to use non-target-prompt essays to award scores to a target-prompt essay.

Many Hands Make Light Work: Using Essay Traits to Automatically Score Essays

To find out which traits work best for different types of essays, we conduct ablation tests for each of the essay traits.

EXPATS: A Toolkit for Explainable Automated Text Scoring

octanove/expats • 7 Apr 2021

Automated text scoring (ATS) tasks, such as automated essay scoring and readability assessment, are important educational applications of natural language processing.


Digital Domain

The Algorithm Didn’t Like My Essay

By Randall Stross

June 9, 2012

As a professor and a parent, I have long dreamed of finding a software program that helps every student learn to write well. It would serve as a kind of tireless instructor, flagging grammatical, punctuation or word-use problems, but also showing the way to greater concision and clarity.

Now, unexpectedly, the desire to make the grading of tests less labor-intensive may be moving my dream closer to reality.

The standardized tests administered by the states at the end of the school year typically have an essay-writing component, requiring the hiring of humans to grade them one by one. This spring, the William and Flora Hewlett Foundation sponsored a competition to see how well algorithms submitted by professional data scientists and amateur statistics wizards could predict the scores assigned by human graders. The winners were announced last month — and the predictive algorithms were eerily accurate.

The competition was hosted by Kaggle, a Web site that runs predictive-modeling contests for client organizations — thus giving them the benefit of a global crowd of data scientists working on their behalf. The site says it “has never failed to outperform a pre-existing accuracy benchmark, and to do so resoundingly.”

Kaggle’s tagline is “We’re making data science a sport.” Some of its clients offer sizable prizes in exchange for the intellectual property used in the winning models. For example, the Heritage Health Prize (“Identify patients who will be admitted to a hospital within the next year, using historical claims data”) will bestow $3 million on the team that develops the best algorithm.

The essay-scoring competition that just concluded offered a mere $60,000 as a first prize, but it drew 159 teams. At the same time, the Hewlett Foundation sponsored a study of automated essay-scoring engines now offered by commercial vendors. The researchers found that these produced scores effectively identical to those of human graders.

Barbara Chow, education program director at the Hewlett Foundation, says: “We had heard the claim that the machine algorithms are as good as human graders, but we wanted to create a neutral and fair platform to assess the various claims of the vendors. It turns out the claims are not hype.”

If the thought of an algorithm replacing a human causes queasiness, consider this: In states’ standardized tests, each essay is typically scored by two human graders; machine scoring replaces only one of the two. And humans are not necessarily ideal graders: they provide an average of only three minutes of attention per essay, Ms. Chow says.

We are talking here about providing a very rough kind of measurement, the assignment of a single summary score on, say, a seventh grader’s essay, not commentary on the use of metaphor in a college senior’s creative writing seminar.

Software sharply lowers the cost of scoring those essays — a matter of great importance because states have begun to toss essay evaluation to the wayside.

“A few years back, almost all states evaluated writing at multiple grade levels, requiring students to actually write,” says Mark D. Shermis, dean of the college of education at the University of Akron in Ohio. “But a few, citing cost considerations, have either switched back to multiple-choice format to evaluate or have dropped writing evaluation altogether.”

As statistical models for automated essay scoring are refined, Professor Shermis says, the current $2 or $3 cost of grading each one with humans could be virtually eliminated, at least theoretically.

As essay-scoring software becomes more sophisticated, it could be put to classroom use for any type of writing assignment throughout the school year, not just in an end-of-year assessment. Instead of the teacher filling the essay with the markings that flag problems, the software could do so. The software could also effortlessly supply full explanations and practice exercises that address the problems — and grade those, too.

Tom Vander Ark, chief executive of OpenEd Solutions, a consulting firm that is working with the Hewlett Foundation, says the cost of commercial essay-grading software is now $10 to $20 a student per year. But as the technology improves and the costs drop, he expects that it will be incorporated into the word processing software that all students use.

“Providing students with instant feedback about grammar, punctuation, word choice and sentence structure will lead to more writing assignments,” Mr. Vander Ark says, “and allow teachers to focus on higher-order skills.”

Teachers would still judge the content of the essays. That’s crucial, because it’s been shown that students can game software by feeding in essays filled with factual nonsense that a human would notice instantly but software could not.

When sophisticated essay-evaluation software is built into word processing software, Mr. Vander Ark predicts “an order-of-magnitude increase in the amount of writing across the curriculum.”

I spoke with Jason Tigg, a London-based member of the team that won the essay-grading competition at Kaggle. As a professional stock trader who uses very large sets of price data, Mr. Tigg says that “big data is what I do at work.” But the essay-scoring software that he and his teammates developed uses relatively small data sets and ordinary PCs, so the additional infrastructure cost for schools could be nil.

Student laptops don’t yet have the tireless virtual writing instructor installed. But improved statistical modeling brings that happy day closer.

Randall Stross is an author based in Silicon Valley and a professor of business at San Jose State University.

Essay-Grading Software Seen as Time-Saving Tool


Jeff Pence knows the best way for his 7th grade English students to improve their writing is to do more of it. But with 140 students, it would take him at least two weeks to grade a batch of their essays.

So the Canton, Ga., middle school teacher uses an online, automated essay-scoring program that allows students to get feedback on their writing before handing in their work.

“It doesn’t tell them what to do, but it points out where issues may exist,” said Mr. Pence, who says the Pearson WriteToLearn program engages the students almost like a game.

With the technology, he has been able to assign an essay a week and individualize instruction efficiently. “I feel it’s pretty accurate,” Mr. Pence said. “Is it perfect? No. But when I reach that 67th essay, I’m not real accurate, either. As a team, we are pretty good.”

With the push for students to become better writers and meet the new Common Core State Standards, teachers are eager for new tools to help out. Pearson, which is based in London and New York City, is one of several companies upgrading its technology in this space, also known as artificial intelligence, AI, or machine-reading. New assessments to test deeper learning and move beyond multiple-choice answers are also fueling the demand for software to help automate the scoring of open-ended questions.

Critics contend the software doesn’t do much more than count words and therefore can’t replace human readers, so researchers are working hard to improve the software algorithms and counter the naysayers.

While the technology has been developed primarily by companies in proprietary settings, there has been a new focus on improving it through open-source platforms. New players in the market, such as the startup venture LightSide and edX, the nonprofit enterprise started by Harvard University and the Massachusetts Institute of Technology, are openly sharing their research. Last year, the William and Flora Hewlett Foundation sponsored an open-source competition to spur innovation in automated writing assessments that attracted commercial vendors and teams of scientists from around the world. (The Hewlett Foundation supports coverage of “deeper learning” issues in Education Week.)

“We are seeing a lot of collaboration among competitors and individuals,” said Michelle Barrett, the director of research systems and analysis for CTB/McGraw-Hill, which produces the Writing Roadmap for use in grades 3-12. “This unprecedented collaboration is encouraging a lot of discussion and transparency.”

Mark D. Shermis, an education professor at the University of Akron, in Ohio, who supervised the Hewlett contest, said the meeting of top public and commercial researchers, along with input from a variety of fields, could help boost performance of the technology. The recommendation from the Hewlett trials is that the automated software be used as a “second reader” to monitor the human readers’ performance or provide additional information about writing, Mr. Shermis said.

“The technology can’t do everything, and nobody is claiming it can,” he said. “But it is a technology that has a promising future.”

‘Hot Topic’

The first automated essay-scoring systems go back to the early 1970s, but there wasn’t much progress made until the 1990s with the advent of the Internet and the ability to store data on hard-disk drives, Mr. Shermis said. More recently, improvements have been made in the technology’s ability to evaluate language, grammar, mechanics, and style; detect plagiarism; and provide quantitative and qualitative feedback.

The computer programs assign grades to writing samples, sometimes on a scale of 1 to 6, in a variety of areas, from word choice to organization. Some products give feedback to help students improve their writing; others can grade short answers for content. To save time and money, the technology can be used in various ways on formative exercises or summative tests.

The Educational Testing Service first used its e-rater automated-scoring engine for a high-stakes exam in 1999 for the Graduate Management Admission Test, or GMAT, according to David Williamson, a senior research director for assessment innovation for the Princeton, N.J.-based company. It also uses the technology in its Criterion Online Writing Evaluation Service for grades 4-12.

Over the years, the capabilities changed substantially, evolving from simple rule-based coding to more sophisticated software systems. And statistical techniques from computational linguistics, natural language processing, and machine learning have helped develop better ways of identifying certain patterns in writing.

But challenges remain in coming up with a universal definition of good writing, and in training a computer to understand nuances such as “voice.”

In time, with larger sets of data, more experts can identify nuanced aspects of writing and improve the technology, said Mr. Williamson, who is encouraged by the new era of openness about the research.

“It’s a hot topic,” he said. “There are a lot of researchers in academia and industry looking into this, and that’s a good thing.”

High-Stakes Testing

In addition to using the technology to improve writing in the classroom, West Virginia employs automated software for its statewide annual reading language arts assessments for grades 3-11. The state has worked with CTB/McGraw-Hill to customize its product and train the engine, using thousands of papers it has collected, to score the students’ writing based on a specific prompt.

“We are confident the scoring is very accurate,” said Sandra Foster, the lead coordinator of assessment and accountability in the West Virginia education office, who acknowledged facing skepticism initially from teachers. But many were won over, she said, after a comparability study showed that a trained teacher and the scoring engine together performed better than two trained teachers. Training involved a few hours on how to assess the writing rubric. Plus, writing scores have gone up since implementing the technology.

Automated essay scoring is also used on the ACT Compass exams for community college placement, the new Pearson General Educational Development tests for a high school equivalency diploma, and other summative tests. But it has not yet been embraced by the College Board for the SAT or the rival ACT college-entrance exams.

The two consortia delivering the new assessments under the Common Core State Standards are reviewing machine-grading but have not committed to it.

Jeffrey Nellhaus, the director of policy, research, and design for the Partnership for Assessment of Readiness for College and Careers, or PARCC, wants to know if the technology will be a good fit with its assessment, and the consortium will be conducting a study based on writing from its first field test to see how the scoring engine performs.

Likewise, Tony Alpert, the chief operating officer for the Smarter Balanced Assessment Consortium, said his consortium will evaluate the technology carefully.

Open-Source Options

With his new company LightSide, in Pittsburgh, owner Elijah Mayfield said his data-driven approach to automated writing assessment sets itself apart from other products on the market.

“What we are trying to do is build a system that instead of correcting errors, finds the strongest and weakest sections of the writing and where to improve,” he said. “It is acting more as a revisionist than a textbook.”

The new software, which is available on an open-source platform, is being piloted this spring in districts in Pennsylvania and New York.

In higher education, edX has just introduced automated software to grade open-response questions for use by teachers and professors through its free online courses. “One of the challenges in the past was that the code and algorithms were not public. They were seen as black magic,” said company President Anant Agarwal, noting the technology is in an experimental stage. “With edX, we put the code into open source where you can see how it is done to help us improve it.”

Still, critics of essay-grading software, such as Les Perelman, want academic researchers to have broader access to vendors’ products to evaluate their merit. Now retired, the former director of the MIT Writing Across the Curriculum program has studied some of the devices and was able to get a high score from one with an essay of gibberish.

“My main concern is that it doesn’t work,” he said. While the technology has some limited use with grading short answers for content, it relies too much on counting words and reading an essay requires a deeper level of analysis best done by a human, contended Mr. Perelman.

“The real danger of this is that it can really dumb down education,” he said. “It will make teachers teach students to write long, meaningless sentences and not care that much about actual content.”


How AI Can Enhance the Grading Process

Artificial intelligence tools, combined with human expertise, can help teachers save time when they’re reviewing student work.

After tucking my son into bed, I’m hit with the realization that since waking up at 6:00 a.m., I’ve not had a moment’s rest, nor have I managed more than brief exchanges with my wife. She’s deeply engrossed in grading sixth-grade math quizzes, a world away in her concentration. Shifting my focus, I dive into assessing a substantial stack of high school history essays, with a firm deadline to return them by tomorrow.

The punctuality I expect from my students is the same standard I set for myself in returning their assignments. Missing a deadline isn’t an option, unless unexpected events or an illness intervenes. In such cases, I may offer bonus points or postpone future deadlines. More than just a pledge, this is my commitment to their success, requiring their best effort and guaranteeing mine in return.

Enhancing Efficiency, Precision, and Fairness

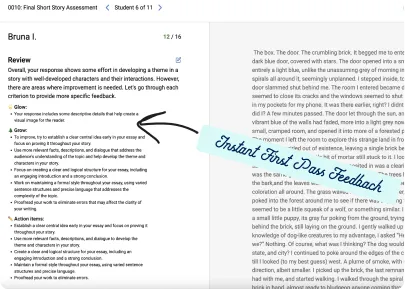

Late that night, CoGrader, a new artificial intelligence (AI)-enhanced platform, piques my interest. A notification on social media directs me to their website, boasting a compelling promise: “Reduce grading time by 80% and provide instant feedback on student drafts.” The allure is heightened by the offer of a 30-day trial, free and without requiring a credit card.

Intrigued by this “AI copilot for teachers,” I sign up to see how its feedback stacks up against my own. I upload a student’s essay on Reconstruction, which I’ve already evaluated and annotated. The results astonish me with their accuracy and detail and are neatly presented in a customizable rubric.

“Your essay employs an organizational structure that shows the relationships between ideas, providing a cohesive analysis of the topic,” reads a portion of the written feedback. “You use transitions effectively to guide the reader through your argument.” This feedback mirrors my own observations, validating my assessment, and increasing my confidence in CoGrader.

CoGrader also deeply impresses me with its “Glow” section, which offers specific praise, and the guidance provided in its “Grow” counterpart, which provides similarly focused areas for improvement.

The inclusion of action items also fosters student inquiry, exemplified by one of the questions provided in my test upload: “How might you further enhance the connections between different sections of your essay to strengthen your argument?” This reflects my priority of encouraging critical thinking.

Even fatigued, I recognize that CoGrader’s value extends beyond saving time. By promising an “objective and fair grading system,” the platform provides a check against the unconscious biases that inadvertently influence grading, try as we might to curb them.

I dip into my caffeine stash and devote the rest of my waking hours to grading solo, yet I’m captivated by what CoGrader could offer me, my students, and the future of impactful feedback.

Annotated Feedback and Mitigating Teacher Guilt

The potential of CoGrader motivated me to contact its cofounder, Gabriel Adamante, to express my admiration and to get his thoughts about the rapidly advancing field of AI. I mentioned that I had expected something like CoGrader to emerge around the time of my son’s 10th or 11th birthday, not his fifth or sixth.

“I ask myself a lot of questions on what’s the responsible use of AI,” Adamante offered. “What is the line? I think we are living in the Wild West of AI right now. Everything is happening so fast, much faster than anyone would have expected. Like you said, you thought this would be five or six years away.”

I asked Adamante whether CoGrader, in this fast-paced environment, has plans to add annotated feedback on a student’s work.

“Honestly, I think we’re talking anywhere from three to five months until we do that,” Adamante said.

“That’s it?” I said in astonishment. “Really? I thought you were going to say three to five years.”

“No, no, no,” he replied. “It’s coming. It should come this year, in 2024. I won’t be happy if it comes in 2025.”

Adamante acknowledged the dizzying effect of AI development, understanding the potential discomfort among educators.

“Of course teachers are mad when students use AI to do their work,” Adamante said. “That makes sense to me because the purpose of a kid doing the work is that they do the work. That’s the purpose. They should write to get practice. The purpose of a teacher grading is not that they grade. The purpose of a teacher grading is that they provide feedback to the student so that the student learns faster. The purpose of grading is not grading itself, whereas the purpose of writing an essay is writing an essay, because you’re practicing.”

Teachers who use CoGrader without reviewing the feedback contradict its intended purpose, which is to scaffold, but not replace, the human element, Adamante explained. He regularly communicates with educators, urging them to carefully read the comments instead of quickly clicking “approve” and moving on to the next submission. Taking time to read the results not only ensures that the teacher agrees, but also keeps the teacher informed about their students’ strengths and weaknesses.

Lessons Learned with ChatGPT 4 and Transparency

Following our conversation, I’ve remained mindful of applying Adamante’s insights to my current use of ChatGPT 4 to aid in providing written feedback. While I avoid asking it to generate feedback on my behalf, I do seek its assistance in clarifying my overarching comments and ensuring their coherence. With the paid subscription, I can even upload a Microsoft Word or PDF document for more precise and detailed assistance. I always meticulously review any output before sharing it with my students.

Adamante’s reminder highlights the importance of transparency in my use of AI to enhance feedback for my students. While I’m not particularly unsettled by my use of ChatGPT 4 as an assistance tool, I realize that I haven’t been as forthcoming as I should be about when and how I use it. I need to address this in my stated classroom policies and through class discussions.

I want students to understand that I always thoroughly review their work and that employing AI to aid in providing feedback doesn’t diminish my dedication. Rather, it’s about finding the most effective way to leverage technology to support their growth as writers and learners.

Reflecting on my discussion with Adamante, I’ve concluded that CoGrader’s precise feedback surpasses that of ChatGPT 4. Moreover, I foresee CoGrader further outshining it with the introduction of annotated feedback.

Now, if only I can get my wife on board with researching how AI can expedite and enhance her feedback on math work. We’d both get more rest and time together, before putting our son to sleep.


GradeCam is a grader app that helps teachers score essays and papers using teacher-completed rubric forms. It saves time, provides instant feedback, and tracks standards for formative assessment.

In summary, ClassX's AI Essay Grader represents a groundbreaking leap in the evolution of educational assessment. By seamlessly integrating advanced AI technology with the art of teaching, this tool unburdens educators from the arduous task of essay grading, while maintaining the highest standards of accuracy and fairness.

Project Essay Grade (PEG) by Measurement Incorporated (MI) is a great automated grading software that uses AI technology to read, understand, process and give you results. By the use of the advanced statistical techniques found in this software, PEG can analyze written prose, make calculations based on more than 300 measurements (fluency, diction ...

Transform grading into learning from anywhere with Gradescope's modern assessment platform, efficient grading workflows, and actionable student data. ... ExamSoft is the leading provider of assessment software for on-campus and remote programs.

The e-rater automated scoring engine uses AI technology and Natural Language Processing (NLP) to evaluate the writing proficiency of student essays by providing automatic scoring and feedback. The engine provides descriptive feedback on the writer's grammar, mechanics, word use and complexity, style, organization and more.

SmartMarq will streamline your essay marking process. SmartMarq makes it easy to implement large-scale, professional essay scoring. Reduce timelines for marking. Increase convenience by managing fully online. Implement business rules to ensure quality. Once raters are done, run the results through our AI to train a custom machine learning model ...

EssayGrader is an AI-powered grading assistant that gives high-quality, specific, and accurate writing feedback on essays. On average, it takes a teacher 10 minutes to grade a single essay; with EssayGrader, that time is cut to 30 seconds. That's a 95% reduction in the time it takes to grade an essay, with the same results. Get started for free.
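The 95% figure follows directly from the two times quoted: 30 seconds is 0.5 minutes, and 0.5 / 10 = 0.05, so the assisted time is 5% of the manual time. A minimal Python check of that arithmetic follows; the function name `percent_reduction` is a hypothetical helper for illustration, not part of any product.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the percentage by which `after` is smaller than `before` (hypothetical helper)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


# Both times in minutes: 10 minutes by hand, 30 seconds (0.5 min) with the tool, as quoted above.
manual_minutes = 10
assisted_minutes = 30 / 60
print(f"{percent_reduction(manual_minutes, assisted_minutes):.0f}% reduction")
```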

Once you're in, you'll save countless hours, cut down on procrastination, and make grading efficient, fair, and helpful. CoGrader is the free AI essay grader for teachers. Use AI to save 80% of the time spent grading essays, and improve student performance by providing instant and comprehensive feedback. CoGrader supports Narrative, Informative ...

ETS is a global leader in educational assessment, measurement, and learning science. Our AI technology, such as the e-rater® scoring engine, informs decisions and creates opportunities for learners around the world. The e-rater engine automatically assesses and nurtures key writing skills, and scores essays and provides feedback on writing using a ...

EssayGrader is an AI-powered tool that provides accurate and helpful feedback based on the same rubrics used by the grading teacher. Its features include speedy grading, comprehensive feedback, estimated grades, focused feedback, organized essays, "show, don't tell," and a personalized approach. The tool offers an easy-to-use guide for better ...

Trusted by educational institutions for surpassing human expert scoring, IntelliMetric® is the go-to essay scoring platform for colleges and universities. IntelliMetric® also aids in hiring by identifying candidates with excellent communication skills. As an assessment API, it enhances software products and increases product value.

Our AI grader matches human scores 82% of the time.* AI scores are 100% consistent.** Graph (Standard AI vs. Advanced AI, deviation from the real grade on a 10-point scale): a dataset of essays was graded by professional graders on a scale of 1 to 10 and cross-referenced against the detailed criteria within the rubric to determine their real scores.
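The 82% figure is an agreement rate between AI and human scores, but the source does not say whether "matches" means identical scores or scores within some tolerance. The sketch below, assuming integer grades on the 1 to 10 scale, computes exact agreement, agreement within one point, and the average deviation from the real grade (mean absolute error), which is roughly what the graph is described as plotting. The function names and score lists are hypothetical and invented for illustration; they are not the vendor's method or data.

```python
from statistics import mean


def _check(ai_scores: list[int], human_scores: list[int]) -> None:
    # zip() would silently truncate mismatched lists, so validate first.
    if len(ai_scores) != len(human_scores) or not ai_scores:
        raise ValueError("need two non-empty score lists of equal length")


def agreement_rate(ai_scores: list[int], human_scores: list[int], tolerance: int = 0) -> float:
    """Fraction of essays whose AI score is within `tolerance` points of the human score."""
    _check(ai_scores, human_scores)
    hits = sum(abs(a - h) <= tolerance for a, h in zip(ai_scores, human_scores))
    return hits / len(ai_scores)


def mean_absolute_error(ai_scores: list[int], human_scores: list[int]) -> float:
    """Average absolute distance between AI scores and the human ("real") grades."""
    _check(ai_scores, human_scores)
    return mean(abs(a - h) for a, h in zip(ai_scores, human_scores))


# Hypothetical grades on the 1-10 scale, for illustration only.
human = [7, 5, 9, 6, 8, 4, 7, 10, 3, 6]
ai = [7, 5, 8, 6, 8, 5, 7, 10, 3, 6]

print(f"exact agreement:     {agreement_rate(ai, human):.0%}")
print(f"within one point:    {agreement_rate(ai, human, tolerance=1):.0%}")
print(f"mean absolute error: {mean_absolute_error(ai, human):.2f}")
```

The same pair of score lists can yield very different "match" percentages depending on the tolerance chosen, which is why an agreement figure is hard to interpret without its definition.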

The software uses artificial intelligence to grade student essays and short written answers, freeing professors for other tasks. The new service will bring the educational consortium into a ...

The Criterion® Online Writing Evaluation Service is a web-based, instructor-led automated writing tool that helps students plan, write, and revise their essays. It offers immediate feedback, freeing up valuable class time by allowing instructors to concentrate on higher-level writing skills.

Nathan Thompson, PhD, April 25, 2023. Automated essay scoring (AES) is an important application of machine learning and artificial intelligence to the field of psychometrics and assessment. In fact, it's been around far longer than "machine learning" and "artificial intelligence" have been buzzwords in the general public!

PaperRater's online essay checker is built for easy access and straightforward use. Get quick results and reports to turn in assignments and essays on time. Advanced checks: experience in-depth analysis and detect even the most subtle errors with PaperRater's comprehensive essay checker and grader.

The core of Gradescope's mission is elevating both instructors and students. With our pioneering grading platform, instructors become more impactful, delivering faster, clearer, and more consistent feedback. Anonymous grading reduces unintended bias, fostering an environment of equitable evaluation. Customized accommodations ensure that every ...

Essay scoring: **Automated Essay Scoring** is the task of assigning a score to an essay, usually in the context of assessing the language ability of a language learner. The quality of an essay is affected by the following four primary dimensions: topic relevance, organization and coherence, word usage and sentence complexity, and grammar and mechanics.
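Of the four dimensions listed, word usage and sentence complexity are the easiest to approximate with simple counts. The sketch below computes three surface features: average sentence length, average word length, and type-token ratio (distinct words divided by total words, a rough measure of vocabulary variety). The function name `surface_features` and this choice of features are assumptions for illustration, not the feature set of any product mentioned here, and such counts say nothing about topic relevance or coherence.

```python
import re


def surface_features(essay: str) -> dict[str, float]:
    """Crude, hypothetical proxies for word usage and sentence complexity."""
    # Split on runs of sentence-ending punctuation; drop the empty piece after the last one.
    sentences = [s for s in re.split(r"[.!?]+\s*", essay.strip()) if s]
    # Words are runs of letters, optionally with one internal apostrophe (e.g. "don't").
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", essay)
    if not sentences or not words:
        return {"avg_sentence_len": 0.0, "avg_word_len": 0.0, "type_token_ratio": 0.0}
    return {
        "avg_sentence_len": len(words) / len(sentences),
        "avg_word_len": sum(len(w) for w in words) / len(words),
        # Distinct (case-insensitive) words over total words: higher = more varied vocabulary.
        "type_token_ratio": len({w.lower() for w in words}) / len(words),
    }


sample = "The cat sat. The cat sat on the mat! Did it stay?"
print(surface_features(sample))
```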

Automated essay scoring (AES) is the use of specialized computer programs to assign grades to essays written in an educational setting. It is a form of educational assessment and an application of natural language processing. Its objective is to classify a large set of textual entities into a small number of discrete categories, corresponding to the possible grades, for example, the numbers 1 to 6.
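Framed as classification, a scorer maps each essay's feature vector to one of a small set of discrete grades. A nearest-centroid classifier is about the simplest way to illustrate this: average the feature vectors of training essays at each grade, then give a new essay the grade whose average is closest. This is a deliberately simple stand-in, not how any particular AES system works; the function names, the two-number feature vectors, and the training data are all hypothetical.

```python
import math
from collections import defaultdict

Vector = tuple[float, ...]


def fit_centroids(examples: list[tuple[Vector, int]]) -> dict[int, Vector]:
    """Average the feature vectors of the training essays at each grade (hypothetical helper)."""
    grouped: dict[int, list[Vector]] = defaultdict(list)
    for features, grade in examples:
        grouped[grade].append(features)
    return {
        grade: tuple(sum(col) / len(col) for col in zip(*vectors))
        for grade, vectors in grouped.items()
    }


def predict_grade(centroids: dict[int, Vector], features: Vector) -> int:
    """Return the grade whose centroid is nearest by Euclidean distance."""
    return min(centroids, key=lambda g: math.dist(centroids[g], features))


# Hypothetical training data: (avg_sentence_len, type_token_ratio) -> grade on a 1-6 scale.
training = [
    ((6.0, 0.40), 1), ((7.0, 0.42), 1),
    ((10.0, 0.50), 3), ((11.0, 0.52), 3),
    ((17.0, 0.65), 6), ((18.0, 0.68), 6),
]
centroids = fit_centroids(training)
print(predict_grade(centroids, (10.5, 0.49)))  # → 3
```

Two caveats apply to this sketch: it can only predict grades that appear in the training data (here 1, 3, and 6), and because the features are not rescaled, sentence length dominates the distance. A more realistic setup would normalize each feature before computing distances.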

As essay-scoring software becomes more sophisticated, it could be put to classroom use for any type of writing assignment throughout the school year, not just in an end-of-year assessment. Instead ...

Strengthen Writing Skills and Increase Student Confidence with MI Write. Our web-based learning environment saves teachers valuable time without sacrificing meaningful instruction and interaction with students. MI Write helps students in grades 3-12 to improve their writing through practice, timely feedback, and guided support anytime and anywhere.

Essay-Grading Software Seen as Time-Saving Tool. Jeff Pence knows the best way for his 7th grade English students to improve their writing is to do more of it. But with 140 students, it would take ...

Late that night, CoGrader, a new artificial intelligence (AI)-enhanced platform, piques my interest. A notification on social media directs me to its website, which boasts a compelling promise: "Reduce grading time by 80% and provide instant feedback on student drafts." The allure is heightened by the offer of a 30-day trial, free and ...